Talk:FLOPS - Wikipedia

Both stand way below a single GTX If so, what is the ratio? The article states the following: Unless someone can give me a reason for not doing so, I will delete this phrase after a couple of days.

I believe the idea is that if the human mind quit doing everything else and devoted all its capacity to calculating, then it would be faster, or rather do more per second. However, we cannot turn our senses off, nor can we stop doing any of the other things our brain does in the background. This measurement is clearly an indicator of performance speed. Also, please sign your comments in future; this is Wikipedia policy.

Does anyone else think the records section should be in order of flops? Shouldn't the article differentiate between double precision and single precision floating point operations? A processor designed specifically to perform single precision operations may not perform so well on double precision operations, and vice versa. Adding a section explaining the difference between the two may also clear up some common misconceptions, such as only the FLOPS count being important.

For example, isn't non-binary multiplication simply a series of addition operations, and therefore more expensive than addition alone? At least in integer arithmetic, I assume floating point arithmetic is similar. The reference I included below is a good starting point to understand the difference between floating point operation and integer fixed-point operation. Floating point vs fixed-point. In the cost of computing section, the calculation assumes continuous watt consumption for the PS3 console.

The gap between and is too wide. It cost thousand roubles pers. It is a bit silly that the side panel goes up to the unit of xeraflop, given that 1 xeraflop is about a billion times more than the sum of the entire computing power on Earth. It is not particularly useful to include units which have not yet found a use. Even statements about hypothetical computing power in the future are much clearer when expressed in terms of units that are actually used now. For example "The supercomputers of may be a thousand petaflops" gets the message across better than "The supercomputers of may be one exaflop", since the exaflop unit has no real reason to be used yet.

See, for example, the official NIST page on prefixes. I'm therefore going to remove it. If anyone objects, we can bring it back, but I'll have to ask for an official source claiming "Xera" is an SI prefix. Supercomputer sets petaflop pace http: Assuming Moore's law holds and computational power doubles every 18 months in the year we will break the xeraflop barrier. A xeraflop is over a trillion petaflops! Perhaps I am wrong, someone please double check the math.
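For anyone who wants to double check the math, here is a rough sketch in Python. It assumes "xera" is meant as 10^27 (which is not an SI prefix, as noted in this thread) and takes the 18-month doubling period from the comment above; the function name `years_to_scale` is only for illustration.

```python
import math

def years_to_scale(factor: float, doubling_period_years: float = 1.5) -> float:
    """Years needed for performance to grow by `factor` at a fixed doubling period."""
    doublings = math.log2(factor)
    return doublings * doubling_period_years

PETA = 10**15          # SI prefix peta
XERA_CLAIMED = 10**27  # assumption: the value claimed for the non-SI "xera"

ratio = XERA_CLAIMED // PETA    # how many petaflops in one "xeraflop"
years = years_to_scale(ratio)   # time from 1 petaflop to 1 "xeraflop"

print(f"ratio = {ratio:.0e}")
print(f"doublings needed = {math.log2(ratio):.1f}")
print(f"years at 18 months per doubling = {years:.1f}")
```

Under those assumptions the ratio is 10^12 (a trillion), which takes about 40 doublings, or roughly 60 years after the first petaflop machine.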

Remain nameless talk Your math is right. The Roadrunner entry should be either clarified or removed. This section of the article has me confused. I don't know whether the prices represent the cost of individual components, specific machines or anything else for that matter. Could someone please clear this up by explaining what the prices are linked to. I have also marked it as confusing on the article.

Given a speed of 3. Even assuming perfect hyperthreading that doubles the effective number of cores this is still a factor of 3 short. The reference given in the quote goes to a website that shows a graph of CPU speed giving 70 GFlops for a top spec processor but is this believable?

People have been reprogramming video cards to act as computation units for many years, but it requires a very specialized programming technique. It is far more doable, though, than the above convoluted calculation. That blasted Xera- prefix showed up again. The next one not yet defined is 9 sets of s. Or if Latin, it's either nove or nona. Either way, it's moot, because SI has not established a 10^27 prefix yet. My personal best guess is that someone's signature on the Starcraft forums (I'm not making this part up), who even acknowledged that it's a bogus word, got propagated into some crease of the public consciousness and found its way here.

I've seen people talking on here mention that a Google of "xeraflops" gets lots of hits, but a search of "xera" with "prefix" gets only a few thousand and most on the first page do not have any relation to numbers or SI.

There should be a list of current processors (Intel Core i7, Athlon 64, Cell, etc.). For example, does a FLOP involve one or two floating point numbers? Assuming two, is it the addition of two FP numbers? Is it a multiplication? If this was answered, I apologize that I didn't see it.

Each of them can calculate one dp FMA fused multiply add per cycle. Good idea to keep an eye out for updates worth mentioning in the article. Any objections to me setting up auto-archive on this page?

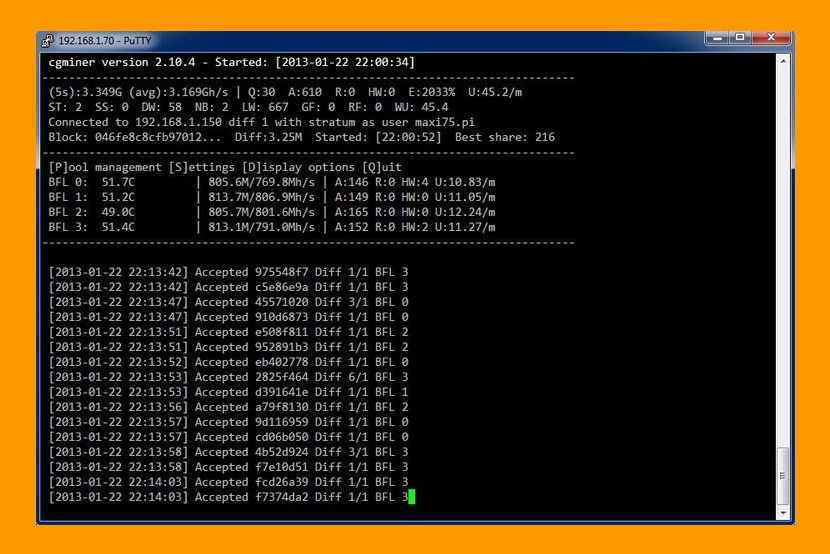

One hash calculation is considered as bit integer operations, and each integer operation is considered equal to two single-precision flops. Source of constants is: Actual bitcoin mining contains no or almost no floating-point calculations.

While the statement is indeed sourced, it seems strange that India will accomplish x what supercomputer leaders plan in the same timeframe. If this is indeed the case, there should be more sources available than the single article that has been provided.

This source can be found on the Wikipedia page Supercomputing in India. Sorry -- what I mean to say is the statement is just not correct: I think India does NOT plan to build such a supercomputer. I think the source is just plain wrong. There isn't a single other corroborating article.

It is also important to consider fixed and floating-point formats in the context of precision: the size of the gaps between numbers. Every time a processor generates a new number via a mathematical calculation, that number must be rounded to the nearest value that can be stored via the format in use.

Since the gaps between adjacent numbers can be much larger with fixed-point processing when compared to floating-point processing, round-off error can be much more pronounced. As such, floating-point processing yields much greater precision than fixed-point processing, distinguishing floating-point processors as the ideal CPU when computing accuracy is a critical requirement.

The above paragraph is totally wrong, even though it is a direct quote from Summary: Fixed-point integer vs floating-point. The reason that article makes that claim there, but it is wrong when quoted here, is because they are comparing their 16 bit Fixed Point product to their 32 bit Floating Point product.

Actually their marketing is being a little bit deceptive in this regard. Given the same number of bits, floating point has greater dynamic range but less precision when compared to fixed point. I am also concerned about the copyright issues: large chunks of that article, "Fixed-point integer vs floating-point", have been copied and pasted directly into this wiki article. First, the author of the original reference at Dell was sloppy in using "FLOPs" as a plural, which is so easily confused with "FLOPS", a rate, differentiated only by capitalization; and then second, a Wikipedia author copied the original formula incompletely, and didn't notice the glaring inconsistency which that left.
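To make the same-number-of-bits comparison concrete, here is a small Python sketch. It compares IEEE 754 single precision (32 bits) against a signed 32-bit fixed-point layout with 16 fraction bits; the Q16.16 layout and the helper name `float32_gap` are my own choices for illustration, not anything taken from the quoted article.

```python
import struct

def float32_gap(x: float) -> float:
    """Gap between positive finite x (rounded to float32) and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    cur = struct.unpack("<f", struct.pack("<I", bits))[0]
    nxt = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return nxt - cur

# Assumed fixed-point layout: signed 32-bit with 16 fraction bits (Q16.16).
FIXED_FRACTION_BITS = 16
FIXED_GAP = 2.0 ** -FIXED_FRACTION_BITS  # the same gap everywhere in range
FIXED_MAX = 2.0 ** 15 - FIXED_GAP        # largest representable Q16.16 value

for x in (1.0, 30000.0):
    print(f"x = {x:>8}: float32 gap = {float32_gap(x):.3e}, "
          f"Q16.16 gap = {FIXED_GAP:.3e}")
print(f"Q16.16 tops out just below {FIXED_MAX + FIXED_GAP:.0f}; "
      f"float32 reaches about 3.4e38")
```

Near 1.0 the float32 gap (2^-23) is finer than the Q16.16 gap (2^-16), but near 30000 the float32 gap has grown to 2^-9 while the fixed-point gap is unchanged. That is the trade-off: float32 spends 8 of its 32 bits on the exponent, gaining range at the cost of significand bits.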

It would be easy enough to correct the units and missing terms, but that would still leave a formula which (1) is overly simplistic, as it doesn't consider threads, pipelines, functional units, or any other hardware details beyond mass-market home PC advertising; (2) doesn't consider asymmetric or hybrid mixed-processor machines, or even MPP machines with non-identical nodes; and (3) at best only calculates an absolute upper limit of FLOPS or GFLOPS, which can only ever be achieved with a very limited optimum instruction sequence, and then only for an ultra-brief time while the FP inputs can be taken from CPU registers.

In short, we'll get a significantly more complicated formula to calculate a result that borders on useless. This brief section was only added to the article within the past week or so. Is there general consensus that it needs to be a part of this article?

And does it need to be the lead section of the article? I agree, the equation is nonsense without a proper context. The equation describes how to calculate the theoretical performance of one specific hardware architecture without regard to memory bandwidth or software overhead. Also, the term "socket" by itself is ambiguous: my initial thought was that it was describing network sockets in a compute cluster, but upon reading the referenced article I see that in this case it refers to the number of IC chip sockets, which is a huge assumption about the hardware.
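For reference, the kind of calculation being discussed can be sketched in a few lines of Python. This assumes the formula is the usual product of sockets, cores per socket, clock rate, and floating-point operations per cycle; the machine parameters below are made up for illustration and do not describe any real system.

```python
def peak_flops(sockets: int, cores_per_socket: int,
               clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical upper bound on FLOPS for a homogeneous machine.

    Ignores memory bandwidth, software overhead, and mixed-processor
    designs, so real benchmark results will be lower.
    """
    return sockets * cores_per_socket * clock_hz * flops_per_cycle

# Hypothetical machine: 2 sockets, 4 cores each, 2.5 GHz,
# 4 double-precision operations per core per cycle.
example = peak_flops(sockets=2, cores_per_socket=4,
                     clock_hz=2.5e9, flops_per_cycle=4)
print(f"theoretical peak: {example / 1e9:.0f} GFLOPS")
```

Note that the per-cycle term hides most of the hardware detail: a unit that issues one fused multiply-add per cycle is commonly counted as 2 operations per cycle, and vector width multiplies that again. This is why the result is only an upper limit and not a substitute for a benchmark.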

If you want to compare the computers you just compare their benchmark performance not some hardware specific conceptual value of what that performance might be. The reference itself was somewhat interesting, perhaps it can be retained, as to the equation itself, it either needs to be accompanied by a lot of explanation and moved further down the page, or else I too vote to delete it. The explanation about fixed point arithmetic in this article is not very good in my opinion, and it is also partially wrong.

The actual article on the subject is much better. The same goes for floating point arithmetic. Since this is explained better in other places on wikipedia, I think this section should be deleted. As a Flop is a measure to compare different computer systems, someone really should explain how to measure in practice.

I can't understand that here. How many bits are to be used? Is it about adding two numbers? The equation for calculating theoretical FLOPS is for the number of logical cores, not physical cores (unless there is 1 logical core per physical core). The numbers Intel provides support this statement. I will leave it to the editors to decide how to incorporate this information into the article. Reference number 2 is no longer valid. My comment is in.

The PS3 is listed at the same 1. Please update the chart for cost per flop. Additionally the data is grossly inaccurate. The entire chart needs to be recalculated using lowest cost per gigaflop data. I was wondering what a typical computer, device or tablet out there in the wild is capable of performing now.

It would appear that a high-end smartphone should outperform a Cray X-MP supercomputer from the s within the next few months, if not already.